Cern unveils Big Bang number-cruncher

The European Organisation for Nuclear Research (Cern) on Friday unveiled "the Grid", a computer network allowing scientists to crunch data on its Big Bang experiment.

Les Robertson, project manager of the Worldwide LHC Computing Grid, told swissinfo why the system that links more than 100,000 processors at 140 institutes in 33 countries could herald the next leap forward in computer technology.

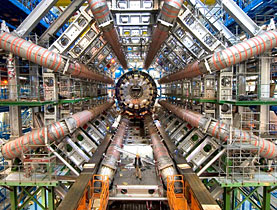

Scientists at Cern, where the worldwide web was invented, created the SFr780 million ($690 million) Grid because they realised that a single computer centre would not be able to cope with the colossal amounts of data flowing from the Large Hadron Collider (LHC), the world’s most powerful particle accelerator.

The LHC experiment involves firing beams of protons in opposite directions around a 27-kilometre tunnel buried 100 metres below the French-Swiss border, on the outskirts of Geneva.

The beams are then smashed together in four giant detector chambers, creating showers of new particles that can be analysed by 7,000 scientists linked to the computer network.

Scientists hope to replicate the conditions that existed one trillionth of a second after the Big Bang, more than 13 billion years ago.

That experiment, which could provide clues about the origins of the universe, began on September 10, but was shut down nine days later because of a helium leak in the LHC tunnel. It is due to restart in spring 2009.

At full capacity, the LHC will produce 600 million proton collisions per second; after filtering, the recorded data will amount to about 700 megabytes per second. Over a year, this adds up to 15 million gigabytes of information, more than a thousand times the amount of data currently available in printed material around the world.
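The two figures in the paragraph above can be reconciled with a little arithmetic. The sketch below assumes decimal units (1 GB = 1,000 MB) and a constant 700 MB/s, and works out how much recording time 15 million gigabytes represents: roughly 2.1 × 10^7 seconds, or about 248 days' worth, rather than a full calendar year of continuous running.

```python
# Back-of-the-envelope check of the quoted LHC data rates.
# Assumptions: decimal units (1 GB = 1,000 MB) and a constant recording rate.

RATE_MB_PER_S = 700            # "700 megabytes of data per second"
ANNUAL_VOLUME_GB = 15_000_000  # "15 million gigabytes" a year
SECONDS_PER_DAY = 24 * 60 * 60


def seconds_of_recording(volume_gb: float, rate_mb_per_s: float) -> float:
    """Seconds of recording at rate_mb_per_s needed to accumulate volume_gb."""
    return volume_gb * 1_000 / rate_mb_per_s


secs = seconds_of_recording(ANNUAL_VOLUME_GB, RATE_MB_PER_S)
days = secs / SECONDS_PER_DAY
print(f"{secs:.3g} s of recording, about {days:.0f} days")
# 2.14e+07 s of recording, about 248 days
```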

swissinfo: How was the Grid created and can you briefly describe how it works?

Les Robertson: It was basically a question of needing more capacity than we had funding for. We decided it was better for everyone to have a distributed system, which would leave the computer resources at the institutes.

We now have 140 sites; it’s a very democratic facility where large and small sites can plug in.

The idea is to interconnect these sites using conventional networking and the internet, and then on top of this you have layers of software to enable the sites to work together and transfer data between them, enabling work to be scheduled across the sites.

You then have more layers of software, which enable physicists to see a single service, so they see only the resources that belong to their experiment.

Our Grid runs on other infrastructure grids like the Enabling Grids for E-Science in Europe and the Open Science Grid in the US.
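The two software layers Robertson describes, one that schedules work across sites and one that shows each physicist only their own experiment's resources, can be illustrated with a toy model. This is a hypothetical sketch, not the Grid's actual middleware: the `Site` class, the site names and the "most free CPUs wins" placement rule are all invented for illustration; only the experiment names come from the article.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """A hypothetical grid site: a name, spare CPUs and the experiments it serves."""
    name: str
    free_cpus: int
    experiments: set[str] = field(default_factory=set)


def visible_sites(sites: list[Site], experiment: str) -> list[Site]:
    """The physicist's 'single service' view: only sites serving their experiment."""
    return [s for s in sites if experiment in s.experiments]


def schedule(jobs: list[tuple[str, str, int]], sites: list[Site]) -> dict[str, str]:
    """Place each (job_id, experiment, cpus) job on the visible site with the most
    free CPUs; jobs that fit nowhere are marked "queued"."""
    placement: dict[str, str] = {}
    for job_id, experiment, cpus in jobs:
        candidates = [s for s in visible_sites(sites, experiment) if s.free_cpus >= cpus]
        if not candidates:
            placement[job_id] = "queued"  # no site serving this experiment has room
            continue
        best = max(candidates, key=lambda s: s.free_cpus)
        best.free_cpus -= cpus  # reserve the CPUs at the chosen site
        placement[job_id] = best.name
    return placement


sites = [
    Site("site-a", 100, {"ATLAS", "CMS"}),
    Site("site-b", 40, {"CMS"}),
    Site("site-c", 60, {"LHCb"}),
]
jobs = [("j1", "CMS", 70), ("j2", "CMS", 35), ("j3", "ALICE", 10), ("j4", "LHCb", 60)]
result = schedule(jobs, sites)
print(result)
# {'j1': 'site-a', 'j2': 'site-b', 'j3': 'queued', 'j4': 'site-c'}
```

The second CMS job spills over to another site once the first site fills up, while the ALICE job waits because no site in this toy setup serves ALICE. A real grid scheduler would presumably weigh further factors, such as where the input data is stored.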

swissinfo: How does the Grid differ from the worldwide web and the internet?

L.R.: The internet is the carrier, the connectivity between sites. You can transfer data from anywhere to anywhere if it is connected to the internet.

The web is a set of protocols and services built on top of the internet, which enable you to share data.

The Grid is a way of sharing computing resources. You can store your data and use various computing resources on the Grid. It’s another application on top of the internet.

swissinfo: How do you see the Grid evolving in the future?

L.R.: For high-energy physics the Grid is a way of plugging in resources and enabling them to be used by the collaborations doing the experiments.

But resources may be provided in other ways in the future. The Grid has been developed in such a way that it’s very flexible, so we should be able to plug in other resource providers.

We hear a lot of discussion about “cloud computing” – a sort of grid network operated by a single supplier [like Amazon and Google] that sells services on it – whereas the Grid is a very open, democratic thing. But these “clouds” could easily be plugged into the Grid.

We will also see some interesting uses of the Grid. By setting up this highly distributed environment there is now no reason for computing to be in any specific place. So as the energy problem increases, as more computers mean more energy, we will probably see computer centres move to places where there is renewable energy and inexpensive electricity and easy cooling, to places like Iceland or Norway.

What we are expecting is that people will find a lot of novel ways of doing computing – whether it’s people trying out interesting, cheaper, energy-saving technologies or smarter ways of doing analysis.

swissinfo: Has the postponement of the LHC experiment had an impact on the Grid and the data-crunching?

L.R.: The Grid has been in operation for a long time, slowly building up to a level where it can support the data flow. For a number of years it has been generating and processing simulated data. This is not just to exercise the Grid but to understand how the detectors work and to prepare for the physics.

More recently as the detectors were installed they’ve been collecting data from cosmic rays, so we actually have real data flowing through the Grid. If there is a delay now, this won’t really have an impact on the Grid as it’s ready and whenever the data comes we’ll be able to process it.

swissinfo, Simon Bradley in Geneva

The LHC tunnel is 27km long and runs between Lake Geneva and the Jura mountain range.

In the LHC, high-energy protons in two counter-rotating beams will be smashed together to search for exotic particles.

The beams contain billions of protons. Travelling just under the speed of light, they are guided by thousands of superconducting magnets.

The beams travel through two separate vacuum pipes, but at four points they collide in the hearts of the main experiments, known by their acronyms: ALICE, ATLAS, CMS, and LHCb.

The detectors could see up to 600 million collision events per second, with the experiments scouring the data for signs of extremely rare events such as the creation of the so-called God particle, the yet-to-be-discovered Higgs boson.

Transfer speeds for the 7,000 scientists within Cern’s network are 10,000 times faster than a traditional broadband connection.

The amounts of data involved in the largest scientific experiment ever conducted are hard to comprehend.

At full capacity the LHC will produce 600 million proton collisions per second, as bunches of protons cross 40 million times per second.

These will be filtered down to 100 collisions of interest per second in the four massive subterranean detectors, the largest of which is the size of a five-storey building.

The data flow will be about 700 megabytes per second or 15 million gigabytes a year for 10 to 15 years, which is enough to fill three million DVDs a year or create a tower of CDs more than twice as high as Mount Everest.
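The DVD and CD comparisons can be checked with simple arithmetic. The disc figures used here are assumptions, not taken from the article: a 4.7 GB single-layer DVD, a 700 MB CD that is 1.2 mm thick, and 8,848 metres for Mount Everest. On those numbers a year's data fills about 3.2 million DVDs, and the CD tower would be nearly 26 km tall, close to three times the height of Everest, so "more than twice" is a conservative description.

```python
# Checking the DVD and CD comparisons for one year of LHC data.
# Disc sizes and Everest's height are assumptions, not figures from the article.

ANNUAL_VOLUME_GB = 15_000_000  # "15 million gigabytes a year"
DVD_GB = 4.7                   # single-layer DVD capacity
CD_GB = 0.7                    # 700 MB CD capacity
CD_THICKNESS_M = 1.2e-3        # standard disc thickness, 1.2 mm
EVEREST_M = 8_848              # height of Mount Everest in metres

dvds = ANNUAL_VOLUME_GB / DVD_GB
cds = ANNUAL_VOLUME_GB / CD_GB
tower_m = cds * CD_THICKNESS_M  # height of all the CDs stacked flat

print(f"DVDs per year: {dvds / 1e6:.1f} million")
print(f"CD tower: {tower_m / 1000:.1f} km, {tower_m / EVEREST_M:.1f} times Everest")
# DVDs per year: 3.2 million
# CD tower: 25.7 km, 2.9 times Everest
```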