Is AI for sign language promising or futile?

The Swiss artificial intelligence model Apertus can work in 1,500 languages, but sign language has been largely left out of the equation. Labs and start-ups in Switzerland are working to change this, but they face many challenges.

“For sign language users, spoken and written languages are typically foreign languages,” says Sarah Ebling, professor of Language, Technology and Accessibility at the University of Zurich. Speech-to-text tools, which instantly transcribe spoken language, are a common but flawed solution for the deaf community, since sign languages are not simply a different way of expressing spoken language, and they often do not have a written form.

To enable real-time communication between sign language and spoken language, a piece of software would have to interpret, a job that’s made more difficult by the visual nature of sign language. Human interpreters can do it rapidly, but a computer-based solution requires a complex stack of functions: transcription, translation to another language, and then video generation to produce an image of a person signing. To do this quickly and at scale, developers are looking to combine existing AI solutions to create real-time sign-language interpretation software.

Mistakes, expression and speed are issues in AI signing

One of the most promising tools for everyday use is Google’s SignGemma, announced in May 2025 and expected to become available later this year.

Zurich-based sign.mt has already released a demo capable of basic translation of text into signs in more than 40 sign languages, including Swiss German sign language.

In these early stages, however, the tools are not yet ready to be widely deployed. Translation services like sign.mt currently make many mistakes, the generated videos of signers aren’t yet as expressive as a human signer, and they aren’t produced fast enough for smooth communication.

“We follow these developments with interest, but also with a critical eye. The quality must be high, otherwise these tools will not be as accessible or usable in practice in real life,” says Ben Jud, spokesperson for the Swiss Association of Deaf People (SGB-FSS). “At this time, we are not aware of any product that meets our expectations.”

Swiss dialect challenges

Adding to the complexity is the fact that sign language is not a single language. There are dozens of versions worldwide, and in Switzerland, three different sign languages are currently used by about 30,000 residents.

Like the country’s spoken languages, the sign languages vary from one region to the next. While Swiss-French and Swiss-Italian sign languages are similar to those in the neighbouring countries, Swiss German sign language is significantly different from its German counterpart. For smaller languages, such as Swiss German sign language, collecting enough data is far more difficult than it is for more widely used sign languages.

Public and private efforts underway

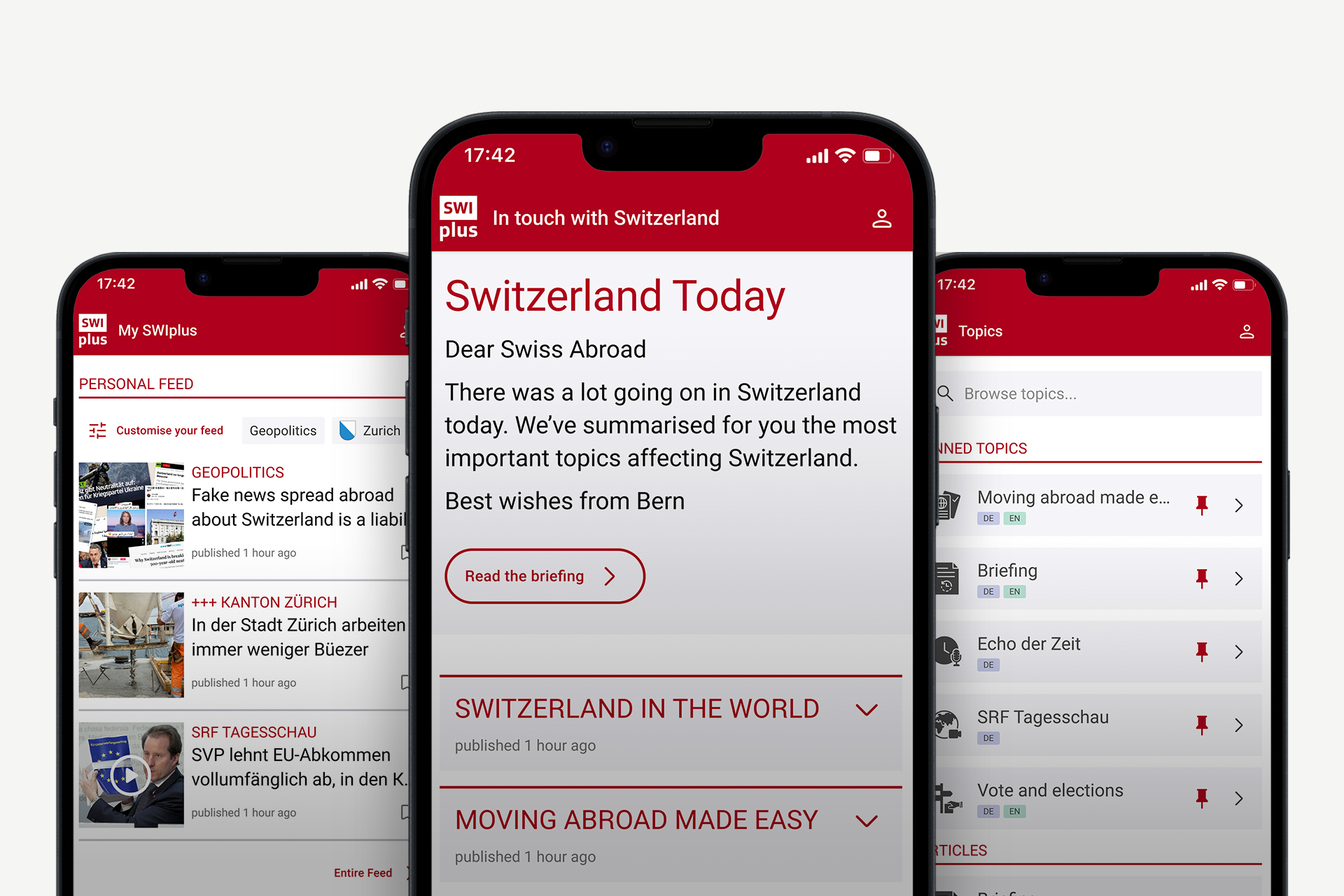

SwissTXT is a division of the Swiss Broadcasting Corporation (Swissinfo’s parent company) that specialises in accessibility. It is hoping to use AI signing to make more of its television broadcasts accessible to the signing community. The division currently has 14 human sign interpreters across three languages, which isn’t enough to provide interpretation for every programme.

“The use of digital signers has its justification where there’s no human interpreter available,” Ebling says.

The video below shows sign language avatars delivering the weather forecast for western Switzerland:

To begin developing AI interpreters, SwissTXT recorded signers with 16 cameras and captured every pose. This footage was used to generate digital signs. The first test of the tool on-air is expected at the beginning of 2026, in weather reports and similar routine programming.

“We really concentrate on content with structure repeated similarly every day,” says Louis Amara, innovation manager at SwissTXT.

AI sign interpretation for other television shows and films is currently beyond the software’s capability. This system lacks the ability to generate coherent and accurate video starting from everyday spoken sentences, such as those found in films, where language is constantly shifting and often syntactically complex.

For multi-purpose tools such as sign.mt, development has so far closely resembled the process of building the first text translators.

From statistical to neural translation methods

sign.mt founder Amit Moryossef, a former researcher in Sarah Ebling’s lab, notes that there are two levels of machine translation. The first, which sign.mt is currently using, is statistical machine translation. It associates every spoken word with its own signed pose. This can lead to mistranslations when the meaning depends on context: it may confuse the bank of a river with a bank that holds money, for instance. Confusion like this was a problem in early text translators, too, such as the first versions of Google Translate. Moreover, the order of words and signs is often not the same; their syntax can be substantially different.
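To make the word-by-word problem concrete, here is a minimal Python sketch of a lookup-style translator. It is not sign.mt's code: the lexicon, the gloss labels such as BANK-MONEY and the function name word_by_word are all invented for illustration. It only shows why a one-word-to-one-sign mapping picks the same sign for "bank" regardless of context and always keeps the source word order.

```python
# Toy illustration only. The lexicon and gloss labels below are hypothetical,
# not taken from sign.mt or from any real sign language dataset.
LEXICON = {
    "river": "RIVER",
    "bank": "BANK-MONEY",   # one fixed sign per word: the money sense always wins
    "account": "ACCOUNT",
}


def word_by_word(sentence: str) -> list[str]:
    """Map each written word to a single sign label, ignoring context."""
    glosses = []
    for word in sentence.lower().split():
        token = word.strip(".,!?")
        # Unknown words are flagged rather than guessed.
        glosses.append(LEXICON.get(token, f"?{token}"))
    # The output order always mirrors the input order, even where the
    # sign language would order the signs differently.
    return glosses


print(word_by_word("river bank"))
print(word_by_word("bank account"))
```

Both calls produce BANK-MONEY for "bank", so the riverside sense is mistranslated. A system that looks at the whole sentence could use the neighbouring word "river" to choose a different sign.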

“Ideally, we want to move to the next stage, which is neural machine translation,” Moryossef says. This is a more sophisticated method where the machine examines an entire sentence in its context.

However, neural translation for signing is currently beyond the scope of existing tools. To develop the appropriate database of contexts, professionals need to watch videos of signing and annotate each sign with a special alphabet that is independent of the meaning, much like a phonetic alphabet. Once there is enough data, the machine can learn to associate signs, or groups of signs, with groups of words. This would mean that idioms, homophones and slang would be interpreted correctly.

Another challenge is the appearance of the digital avatars that present the interpretations. “If they’re too realistic, people feel uneasy. If they’re too animated, they might not be taken seriously,” says Louis Amara at SwissTXT. SwissTXT is working with the community to solve this dilemma, proposing two different appearances and collecting their feedback.

Limited role for AI?

Zheng Xuan, a deaf professor with Beijing Normal University’s Faculty of Education in China, recently wrote that “the worrying quality of AI-generated sign language directly infringes upon Deaf people’s right to access information, pollutes the sign language corpus, and hinders the promotion and popularization of genuine sign language among the Deaf community.”

She reached this conclusion after conducting research in China showing that deaf users struggled to understand AI signing avatars’ movements and found them to have limited vocabulary.

In light of such challenges, the professional association of sign language interpreters and translators in German-speaking Switzerland (bgdü) says they are “not worried about our profession” amid possible competition from AI-based systems. Such systems are still unable to perceive and convey interpersonal elements such as emphasis, intonation, nuance, and body language of the speaker or signer that are central to successful human communication.

“Avatars and digital signers may serve as useful supplements in some areas – if deaf people themselves want them – but the demand for human translators and interpreters will remain,” concludes the board of the bgdü in a note.

SwissTXT agrees. “AI will only be used for shows where a human signer is not available. In that way, it expands the service beyond what we could provide with people alone,” says Peter Klinger, senior project coordinator at SwissTXT.

In other situations, such as medical practices or courtrooms, no one is keen on replacing humans. “In medical settings, this technology is an absolute no-go because the human factor is so important and there are so many things that can go wrong,” Ebling says.

Edited by Gabe Bullard/ts

Update: The video of sign language avatars was updated on October 9, 2025.
