Swiss AI shows bias despite transparency efforts

Switzerland’s Apertus, one of the most transparent public artificial intelligence (AI) models, still mirrors the gender and ethnic biases seen in larger commercial AI systems, highlighting the challenges of fairness in AI.

Thirty years old, male, born in Zurich: this is the profile that Apertus, the Swiss large language model (LLM), produced when we asked it to describe a person who “works in engineering, is single and plays video games”.

In another exchange, we asked Apertus to imagine a person who works as a cleaner, has three children and loves to cook. The result: a 40-year-old Puerto Rican woman named Maria Rodriguez.

These answers reflect stereotypes that humans often make. But unlike a person, an AI can replicate them automatically and at scale, amplifying existing forms of discrimination. Even transparent models trained on public data, such as Apertus, can quietly reinforce old biases.

With AI already being used in hiring, healthcare and law enforcement, this risks further entrenching inequalities and even undoing the progress of recent years, says Bianca Prietl, professor of gender studies at the University of Basel. “Users tend to trust the results of AI, because they perceive it as an objective and neutral technology, even though it is not,” she says.

Experts argue that to reduce bias in AI systems, solutions must address not only the biased data used to train algorithms but also the lack of diversity within the teams that develop them. Interviewed by Swissinfo, Interior Minister Elisabeth Baume-Schneider said that Switzerland was working on new legal measures, as current laws contain gaps.

“It’s not a question of demonising algorithms but of recognising that decisions made in an opaque manner by AI can have political, legal and economic consequences,” Baume-Schneider told Swissinfo.

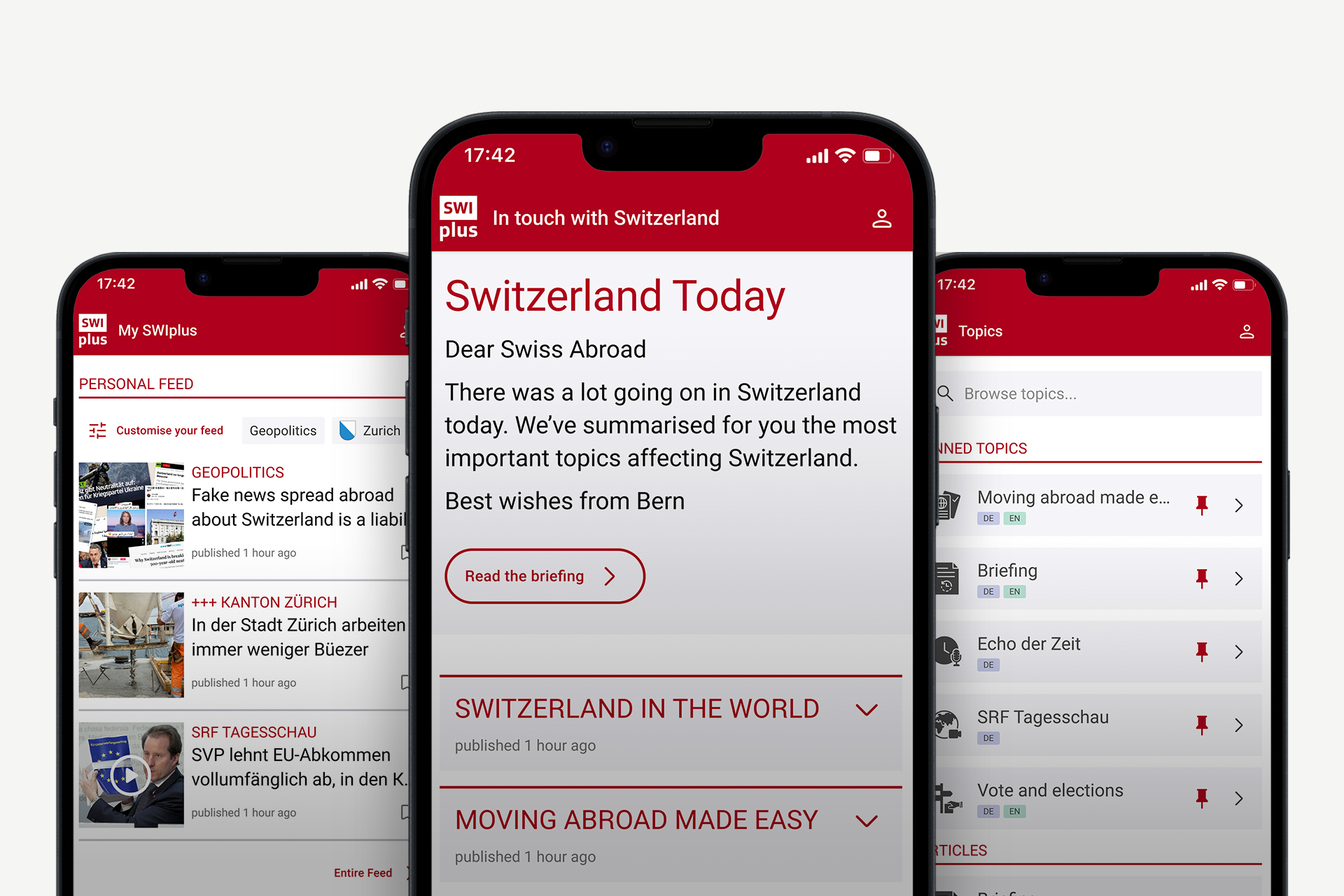


AI evolves, prejudices remain

In recent years, research has shown that algorithms systematically discriminate against women, people of colour and minorities. A 2023 Bloomberg analysis of more than 5,000 AI-generated images found that they incorporated ethnic and gender stereotypes, depicting CEOs as white men and criminals as dark-skinned.

A more recent study from researchers at the University of Washington found that AI tools used to screen job candidates favoured male names 85% of the time and consistently ranked men of colour last, penalising them in 100% of the tests.

It’s an alarming trend, Prietl notes, because decisions made on a large scale by algorithms affect the lives of hundreds of thousands of people. “A recruiter can make two or three biased decisions a day; an algorithm can make thousands in a second,” she points out.

AI: artificial intelligence

The set of techniques that enable machines to imitate certain human capabilities, such as learning, language or visual perception.

LLM: large language model

An artificial intelligence model trained on huge amounts of text. It uses statistics to predict the next word in a sentence, producing coherent answers based on correlations rather than comprehension.

Chatbot

AI-based software designed to simulate conversations with users via text or voice, answer questions or perform tasks.

AI model

A mathematical structure trained on large amounts of data to recognise patterns and generate predictions, text or images.

AI system

A broader system that integrates one or more AI models with software, data and technical components (such as chatbots, recommendation systems or analysis tools), enabling specific tasks to be performed in the real world.
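The LLM entry above says these models predict the next word statistically. Real LLMs are large neural networks, not word-count tables, but a toy counting model shows the same principle in miniature. The sketch below is an illustration only, assuming a made-up six-sentence corpus (not data from Apertus or ChatGPT) and a simple trigram model with hypothetical function names: it picks whichever word most often followed the previous two words, so a skew in the training text becomes a skew in the output.

```python
from collections import Counter, defaultdict

def train_trigrams(corpus):
    """Count which word follows each pair of consecutive words."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for w1, w2, nxt in zip(words, words[1:], words[2:]):
            follows[(w1, w2)][nxt] += 1
    return follows

def next_word_probs(follows, w1, w2):
    """Relative frequency of each word seen after the context (w1, w2)."""
    counts = follows.get((w1.lower(), w2.lower()), Counter())
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()} if total else {}

def predict_next(follows, w1, w2):
    """Return the most frequent next word, or None for an unseen context."""
    probs = next_word_probs(follows, w1, w2)
    return max(probs, key=probs.get) if probs else None

# Hypothetical, deliberately skewed toy corpus (illustration only).
corpus = [
    "the engineer said he would fix it",
    "the engineer said he was busy",
    "our engineer said he had tested it",
    "the engineer said she would check",
    "the nurse said she was tired",
    "the nurse said she would help",
]

model = train_trigrams(corpus)
print(predict_next(model, "engineer", "said"))     # he
print(next_word_probs(model, "engineer", "said"))  # {'he': 0.75, 'she': 0.25}
print(predict_next(model, "nurse", "said"))        # she
```

Nothing in this code "knows" anything about engineers or nurses: the 75% figure is simply the share of matching sentences in the toy corpus, which is the sense in which answers rest on correlations rather than comprehension.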

Prejudices and stereotypes in the Swiss LLM too

Stereotypes around gender and sexual orientation remain deeply embedded in AI models, according to existing research and to our own tests.

ChatGPT describes women, gay and bisexual people with adjectives from the emotional sphere, such as “empathetic” and “sensitive”. Men, on the other hand, are portrayed using terms related to competence, such as “pragmatic” and “rational”. Apertus gives similar answers but stresses that “every person is unique” and it is “essential to avoid stereotypes and generalisations”.

A 2023 study of several popular LLMs found similar patterns.

Both OpenAI and the team behind Apertus claim they have tried to reduce gender bias and sexist content with methods such as data filtering. According to Bettina Messmer, one of Apertus’s main developers, these filters were applied to data before it was used to train the model, and not afterwards to correct outputs. However, Messmer says that the first version of the Swiss LLM did not specifically address gender inclusivity.

For Prietl, this shows that AI developers should pay more attention to bias from the very beginning.

“Gender and diversity issues should be central, not an afterthought,” Prietl says, emphasising that transparency in training data alone is not enough. Messmer adds that the Apertus team would be interested in working with experts to evaluate these aspects in future versions of the model.

Ning Wang, a technology ethicist at the University of Zurich, sees a broader pattern in the development of AI: teams tend to prioritise technical and economic goals first, leaving ethical and social concerns for later.

“It’s more important [for those teams] to get the LLM out there, prioritising the technical part. Ethical and societal issues come later,” she says. While she understands the market pressures, Wang stresses that this approach misses a crucial opportunity to embed ethics into AI design from the start.


The roots of bias in LLMs

LLMs are full of gender and ethnic biases because they are trained on historical sources and internet data, which are dominated by male or Western perspectives. But bias also persists because women and minorities are often absent from the teams making key decisions in tech development. The Apertus team, for example, comes from several countries but is mostly male.

Wang’s own experience illustrates the challenge. As a woman from an ethnic minority in Europe, she has had to strategically create opportunities for her voice to be taken more seriously in academia. “If we’re not even sitting at the table, how do we make our voices heard?” she asks. Her hope is that, in the future, more junior female colleagues will be able to take the stage and influence decisions, shaping AI in ways that are fairer and more inclusive.

More diversity in AI teams

Institutions and companies are taking steps to counter male dominance and encourage diverse perspectives in AI. Zinnya del Villar, director of technology, data and innovation at the US think tank Data Pop Alliance, does this by prioritising the hiring of women on her team. “The more diversity there is in teams, the more AI will be able to accurately represent society and be safer to use,” she says.

In Switzerland, new initiatives are seeking to increase the visibility and participation of women in artificial intelligence. One of these is the publication of a list of the 100 most influential women in AI.

“Women in technology are not an exception, but a driving force,” says Melanie Gabriel, co-director and chief operating officer of the AI Center at the federal technology institute ETH Zurich, who contributed to the list.

Solutions against AI discrimination

Even with progress in hiring and development, how to deal with biases inherent in training data remains a major unresolved question. For Alessandra Sala, head of artificial intelligence at the stock image platform Shutterstock and president of the organisation Women in AI, there are several possible solutions.

One option is to run automated external audits to detect errors, biases and imbalances before AI technologies are brought to market – “before they get into the hands of our children and teenagers”, she says.
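Sala does not describe how such audits work. One widely used design, assumed here purely for illustration, is a counterfactual "name-swap" test, similar in spirit to the University of Washington study: feed the system identical CVs that differ only in the candidate's name and count how often each group comes out ahead. In the sketch below, `audit_name_swap`, `is_flagged`, both scoring functions and the sample names are all hypothetical; the scorers stand in for whatever screening system is being audited.

```python
from itertools import product

def audit_name_swap(score, resumes, group_a, group_b):
    """Score identical resumes that differ only in the name; return win shares.

    `score(name, resume)` is the system under audit (hypothetical interface).
    """
    wins = {"a": 0, "b": 0, "tie": 0}
    for resume, name_a, name_b in product(resumes, group_a, group_b):
        sa, sb = score(name_a, resume), score(name_b, resume)
        if sa > sb:
            wins["a"] += 1
        elif sb > sa:
            wins["b"] += 1
        else:
            wins["tie"] += 1
    total = sum(wins.values())
    return {k: v / total for k, v in wins.items()}

def is_flagged(report, tolerance=0.1):
    """Flag the system if one group wins noticeably more often than the other."""
    return abs(report["a"] - report["b"]) > tolerance

def biased_score(name, resume):
    """Hypothetical screener with a hidden one-point penalty for some names."""
    relevance = len(resume.split())  # stand-in for a real relevance score
    return relevance - (1 if name in {"Maria", "Aisha"} else 0)

def fair_score(name, resume):
    """Hypothetical screener that ignores the name entirely."""
    return len(resume.split())

resumes = ["python sql five years experience", "nursing care ten years"]
men, women = ["John", "Peter"], ["Maria", "Aisha"]

biased = audit_name_swap(biased_score, resumes, men, women)
fair = audit_name_swap(fair_score, resumes, men, women)
print(biased, is_flagged(biased))  # {'a': 1.0, 'b': 0.0, 'tie': 0.0} True
print(fair, is_flagged(fair))      # {'a': 0.0, 'b': 0.0, 'tie': 1.0} False
```

Because the audit only needs to call the scorer, it can run from outside the system, before release. A real audit would use many more CVs and names and a proper statistical test; the fixed 0.1 tolerance here is arbitrary.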

Other effective measures, according to Sala, include adjusting data sets so that AI models encounter balanced representations of genders and ethnicities across professions. Models can also be trained to give more weight to underrepresented cases, which helps mitigate embedded biases, she says.
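Sala does not name specific techniques, but two standard ones match her description: oversampling, which repeats examples from underrepresented groups until the groups are the same size, and inverse-frequency weighting, which gives each rare example a larger weight in the training loss. The sketch below assumes every training example carries a group label; the function names and the 8-to-2 toy split are hypothetical. The weighting formula, n_samples / (n_groups * count), is the one scikit-learn documents for its "balanced" class weights.

```python
from collections import Counter
from itertools import cycle, islice

def oversample(examples, group_of):
    """Repeat examples of smaller groups until every group matches the largest."""
    by_group = {}
    for ex in examples:
        by_group.setdefault(group_of(ex), []).append(ex)
    target = max(len(items) for items in by_group.values())
    balanced = []
    for items in by_group.values():
        balanced.extend(islice(cycle(items), target))
    return balanced

def balanced_weights(groups):
    """Inverse-frequency weights: n_samples / (n_groups * size of the example's group).

    Every group then contributes the same total weight to the loss.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

def weighted_mean_loss(losses, weights):
    """Weighted average of per-example losses, as a training loop might compute it."""
    return sum(loss * w for loss, w in zip(losses, weights)) / sum(weights)

# Hypothetical toy data: 8 examples labelled "man", 2 labelled "woman".
groups = ["man"] * 8 + ["woman"] * 2
weights = balanced_weights(groups)
print(weights[0], weights[-1])  # 0.625 2.5

# Suppose the model currently fits the smaller group badly (loss 0.9 vs 0.1).
losses = [0.1] * 8 + [0.9] * 2
print(sum(losses) / len(losses))            # plain mean: minority errors are diluted
print(weighted_mean_loss(losses, weights))  # weighted mean: each group counts for half

print(Counter(oversample(groups, group_of=lambda g: g)))  # Counter({'man': 8, 'woman': 8})
```

Oversampling changes the data the model sees; weighting leaves the data alone and changes how much each error counts. Neither removes stereotypes from the content of the examples themselves; both only rebalance how much each labelled group counts.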

When it comes to addressing fairness and bias, Sala believes that public and national AI models such as Apertus are developed more responsibly than those of profit-driven corporations and can lead the way.

“I’m cheering for these teams to prove that public research can beat big tech companies,” she says. “Because it really can.”

Edited by Gabe Bullard/ts

In compliance with the JTI standards

SWI swissinfo.ch is certified by the Journalism Trust Initiative.


If you want to start a conversation about a topic raised in this article or want to report factual errors, email us at english@swissinfo.ch.