AI would vote for mainstream parties, shows Swiss experiment

ChatGPT, a generative artificial intelligence (AI) chatbot, votes differently from humans, according to an experiment at the federal technology institute ETH Zurich. Nevertheless, AI will change democracy, say the researchers.

If ChatGPT had the vote, it would probably back an established party, the results of the study suggest. The study also concludes that ChatGPT makes more streamlined decisions than humans.

How was the Computational Social Science team at ETH Zurich able to find this out?

The large language models are programmed to react evasively to political questions. “If you asked ChatGPT whether you should vote for Donald Trump or Kamala Harris, the AI said it was neutral and gave no answer,” Joshua Yang, a computer scientist at ETH Zurich, told SWI swissinfo.ch.

So the team didn’t ask for big political decisions; instead, it asked the AI models ChatGPT4 and Llama 2 for their opinions on local, ostensibly non-political urban development projects.

Among these were proposals to make Zurich’s Langstrasse car-free, to establish a multicultural festival on Sechseläutenplatz, and to set up a children’s festival in Leutschenpark. Which of the 24 projects would AI choose to make the city of Zurich better for its citizens?

The scientists then compared the results of the AI models with those of 180 human participants in an analogue experiment involving the same projects.

The human and AI participants made their decisions in sessions with a variety of set-ups and voting procedures. Sometimes they awarded points to individual projects; sometimes they chose as many projects as they wanted. Tests were also conducted using the ranked-choice procedure.
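For readers who want to see how these three ballot formats differ in practice, here is a minimal Python sketch. It is not the ETH team's code: the project identifiers, the sample ballots and the use of a Borda count to score ranked ballots are all assumptions made for illustration.

```python
from collections import Counter

# Hypothetical short identifiers for three of the 24 projects.
PROJECTS = ["car_free_langstrasse", "multicultural_festival", "children_festival"]


def tally_points(ballots):
    """Points voting: each ballot maps a project to the points awarded."""
    totals = Counter({p: 0 for p in PROJECTS})
    for ballot in ballots:
        for project, points in ballot.items():
            totals[project] += points
    return dict(totals)


def tally_approval(ballots):
    """Approval voting: each ballot lists every project the voter supports."""
    totals = Counter({p: 0 for p in PROJECTS})
    for approved in ballots:
        for project in set(approved):  # a project counts once per ballot
            totals[project] += 1
    return dict(totals)


def tally_borda(ballots):
    """Ranked ballots scored with a Borda count (an assumed scoring rule):
    the top choice of n ranked projects gets n - 1 points, the last gets 0."""
    totals = Counter({p: 0 for p in PROJECTS})
    for ranking in ballots:
        n = len(ranking)
        for position, project in enumerate(ranking):
            totals[project] += n - 1 - position
    return dict(totals)


# Made-up ballots, two voters per format.
points_result = tally_points([
    {"car_free_langstrasse": 3, "children_festival": 2},
    {"multicultural_festival": 5},
])
approval_result = tally_approval([
    ["car_free_langstrasse", "children_festival"],
    ["children_festival"],
])
borda_result = tally_borda([
    ["car_free_langstrasse", "children_festival", "multicultural_festival"],
    ["children_festival", "car_free_langstrasse", "multicultural_festival"],
])
```

The same voters can produce different winners depending on the format, which is one reason the experiment ran several procedures side by side.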

The results of this experiment can be applied primarily to participatory budget decisions and elections with multiple winners – such as proportional representation elections, where parties send representatives from their electoral lists to parliament in proportion to their results.

However, the ETH study has limited relevance for elections with only one winner – such as US presidential elections.

AI models showed ‘more uniform behaviour’

In general, the differences between humans and AI were large – even when the AI was asked to take on the role of a human.

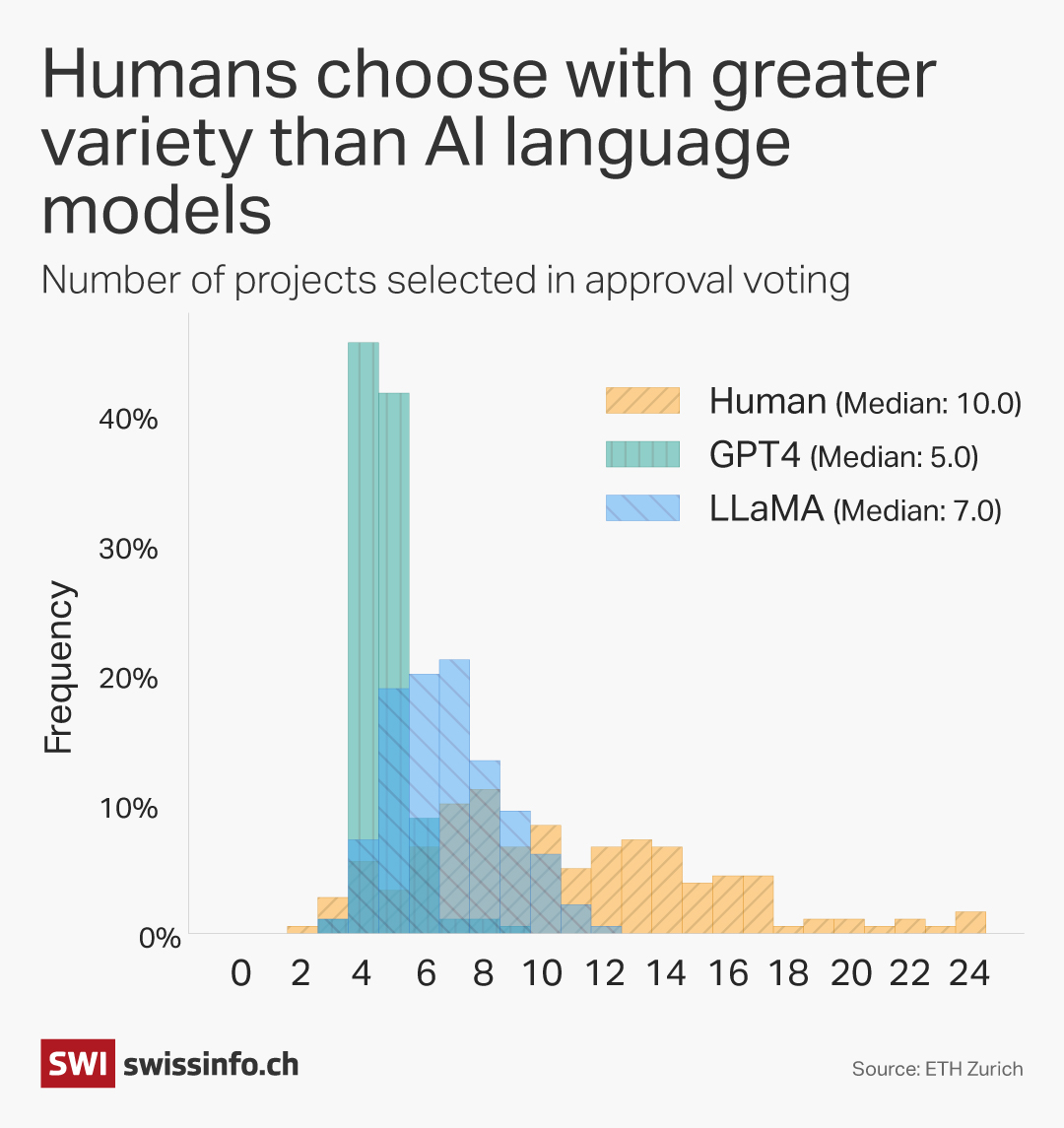

The AI models often chose the same number of projects and showed “more uniform behaviour” than humans, according to the study.

ChatGPT almost always chose four or five projects, while the 180 humans were enthusiastic about widely differing numbers of projects.

According to the study, this result confirms that “synthetic AI-simulated samples have a WEIRD bias (Western, educated, industrialised, rich, democratic)” and “often fail to show meaningful variance (or diversity) in their judgments”.

But at the same time, AI allowed itself to be influenced in its decision by the order of the projects on the list. This shows the limits of its decision-making competence: imagine if humans voted for a different party depending on what was at the bottom of the ballot paper and what was at the top.
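A check for this kind of order dependence can be sketched in a few lines of Python. This is an illustration only, not the method used in the study: the two toy "voters", the project names and the costs are hypothetical, and a real test would replace them with calls to a language model.

```python
import random

# Hypothetical projects with made-up costs in Swiss francs.
COSTS = {
    "car_free_langstrasse": 50_000,
    "multicultural_festival": 30_000,
    "children_festival": 10_000,
    "bike_lanes": 40_000,
}


def selection_sets(choose, projects, n_orderings=20, seed=0):
    """Present the same projects in shuffled orders and record each choice."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_orderings):
        order = list(projects)
        rng.shuffle(order)
        results.append(frozenset(choose(order)))
    return results


def is_order_sensitive(choose, projects, **kwargs):
    """True if the chosen set changes when only the list order changes."""
    return len(set(selection_sets(choose, projects, **kwargs))) > 1


def top_of_list_voter(ordered_projects):
    """Toy voter that simply picks whatever appears first on the list."""
    return ordered_projects[:2]


def cheapest_voter(ordered_projects):
    """Toy voter that picks the two cheapest projects, whatever the order."""
    return sorted(ordered_projects, key=COSTS.get)[:2]


biased = is_order_sensitive(top_of_list_voter, list(COSTS))
stable = is_order_sensitive(cheapest_voter, list(COSTS))
```

A voter whose choices depend only on the content of the projects, like `cheapest_voter` here, returns the same set for every ordering; one that reacts to position does not.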

‘Human-centred approach’

But at least the AI models opted more frequently for less expensive projects. The study recognises this and points out that human voters often lack cost awareness.

The ETH team’s research refers to César Hidalgo’s ideas on digital AI twins to replace politicians and the proposal by two economists to assign a digital twin to each person entitled to vote.

Read our article on the idea of changing democracy with digital twins:

Digital citizens could shake up democracy in Switzerland and beyond

The study, which Yang presented at the Conference on AI, Ethics, and Society in San José, California, urges caution. “A human-centred approach is essential to ensure that AI deployment supports rather than compromises the collective intelligence derived from diverse human preferences in society,” it says.

Instead, AI models should be used “in a human-in-the-loop framework”. That way, an AI agent can improve human decision-making; for example, by processing, summarising or explaining background knowledge on a topic.

Dealing with sensitive personal data

“We were interested in finding out how language models affect democracy as we know it,” Yang says. “Many people already ask ChatGPT questions. The language model informs them and therefore influences their political decisions.”

The tricky question is how to handle personal data. “What information do we feed AI when we ask it to take the perspective of a person?” Yang asks. It is possible to use sensitive demographic data such as skin colour and religion as a basis, or even the transcript of a long, personal conversation with the person.

The personas can also be created on the basis of an opinion poll, similar to Smartvote. The ETH team used this approach in their experiment. According to Yang, this may not be any less efficient. He hopes for clear ethical and legal guidelines and a social discussion about where to draw the line between functionality and privacy.

Swiss democracy as ‘ideal testing ground’

While the study tends to emphasise critical aspects, Yang’s enthusiasm for AI models is palpable. “I am cautiously optimistic that AI holds a lot of potential for democracy,” he says. AI could help people make more frequent and better decisions without taking agency from them, he adds.

Switzerland, with its frequent referendums, is “an ideal testing ground” for AI technologies, he says. This is because AI models are better at helping with referendums that can be approached rationally than with emotionally driven elections. They could potentially increase low voter turnout – especially on local issues.

A digital voting booklet that Swiss citizens can not only read but also consult is conceivable, for example. This could motivate those who stay away from voting due to a lack of knowledge.

“If a specific AI agent with a wealth of data relevant to the task is created for this purpose, there is also a significantly lower risk that it will convey incorrect facts,” Yang explains. “AI agents hallucinate when they don’t have access to the correct answer.”

However, large language models such as ChatGPT are unsuitable for this because they rely equally on relevant and misleading data.

Yang, who is involved in local democracy projects in Switzerland and Taiwan, sees a global opportunity for AI to boost direct democratic (and deliberative) instruments. Initially, Yang believes, individual politicians and groups will rely on AI agents to analyse discussions and prepare arguments, compromises and options for action.

Gradually, AI agents could then become part of the institutions.

Edited by Mark Livingston. Adapted from German by Catherine Hickley/ts
