Switzerland aspires to build ‘human’ artificial intelligence

Swiss developers have entered the global race to build artificial intelligence (AI) that is capable of “thinking” like humans. Some of them claim to be on the verge of a breakthrough. Is this really the case?

Since the public launch of ChatGPT in 2022, millions of people have become used to interacting with AI as if it were a person. But so far, no AI tool has proven to be intelligent in the “human” sense of the term.

The most popular public-facing AI platforms are Large Language Models (LLMs) that make predictions based on recurring patterns learned from massive amounts of data. Most are unable to learn in real time and adapt to new information the way the human mind does.
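
To make "predictions based on recurring patterns" concrete, here is a deliberately tiny sketch of statistical next-word prediction. It is an illustration only: real LLMs use neural networks trained on vastly more data, and the toy corpus and the function names `train_bigram_model` and `predict_next` are assumptions made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    words = text.lower().split()
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(follow_counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = follow_counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this sketch.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))  # "cat": the most common word after "the"
print(predict_next(model, "dog"))  # None: "dog" never appeared in the corpus
```

The model answers confidently for words it has seen and has nothing to offer for words it has not, which is the gap between imitating patterns and adapting to new information that the article describes.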

“They give us the illusion that they are as intelligent as we are, but this is just statistical imitation, not true understanding,” says Torsten Hoefler, a professor in the department of computer science at the federal technology institute ETH Zurich.

To bridge the gap between humans and machines, companies and research institutes around the world are racing to develop artificial general intelligence (AGI), defined as AI with human-level understanding and adaptability. AGI is the goal of many in the AI field because it would allow machines to perform virtually any task with the accuracy and flexibility of a human being.

In Switzerland, some developers think they are close to developing AI with human intelligence. But some experts question whether LLMs or similar models could ever be enough to get there, and whether “human” AI is even a desirable goal.

Testing machine intelligence

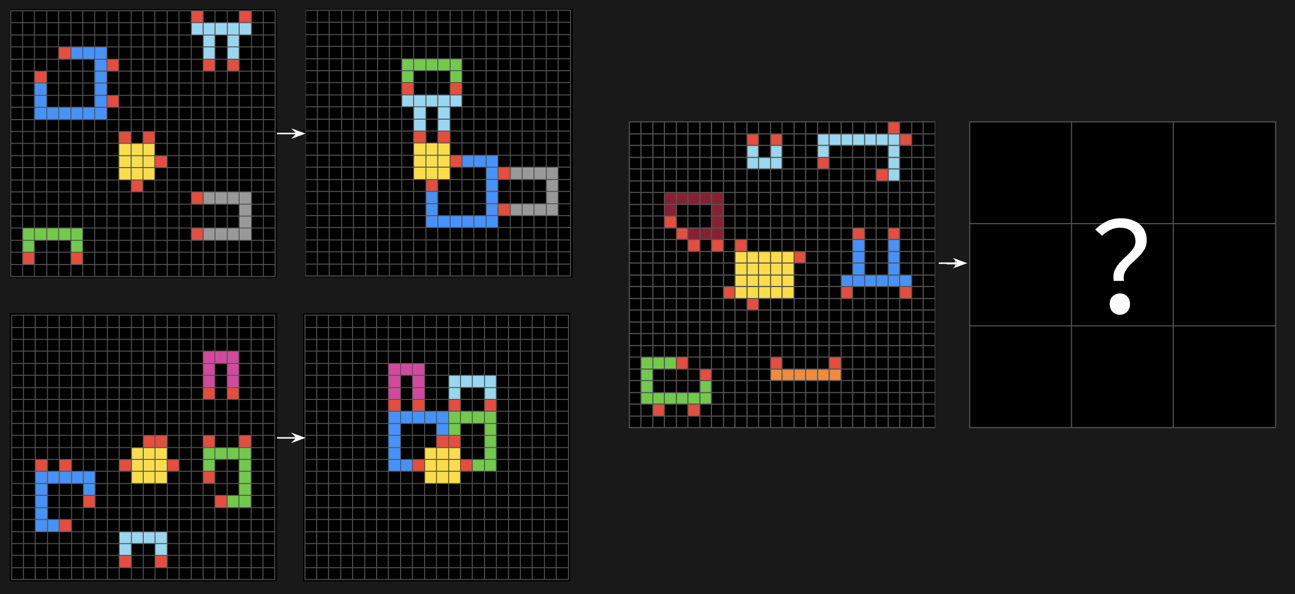

At the centre of the race is a Swiss start-up, Giotto.ai, which currently leads the ARC Prize, a global competition that measures how close AI systems are to human-like reasoning through a series of visual puzzles. At the time of writing, Giotto.ai reported solving 27.08% of the puzzles, outperforming popular large language models such as Grok 4 and GPT-5. The current leg of the competition ends on November 3.

The Abstraction and Reasoning Corpus (ARC) is one of the world’s leading benchmarks for measuring progress toward artificial general intelligence. It consists of visual puzzles that test an AI’s ability to reason abstractly and generalise from examples – tasks that humans can typically solve relatively easily but that remain difficult for current AI systems.
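
The idea of "generalising from examples" can be sketched with a toy puzzle in the spirit of ARC. This is not how any competitor's system works, and real ARC tasks are far more varied. The grids, the three candidate rules and the function `infer_rule` are all assumptions invented for this illustration.

```python
def flip_horizontal(grid):
    """Mirror each row left to right."""
    return [list(reversed(row)) for row in grid]


def flip_vertical(grid):
    """Mirror the grid top to bottom."""
    return [list(row) for row in reversed(grid)]


def transpose(grid):
    """Swap rows and columns."""
    return [list(column) for column in zip(*grid)]


# Hypothetical, hand-picked rule set; a real solver cannot enumerate rules this way.
CANDIDATE_RULES = {
    "flip_horizontal": flip_horizontal,
    "flip_vertical": flip_vertical,
    "transpose": transpose,
}


def infer_rule(examples):
    """Return the name of the first rule that explains every input/output pair."""
    for name, rule in CANDIDATE_RULES.items():
        if all(rule(grid_in) == grid_out for grid_in, grid_out in examples):
            return name
    return None


# Two demonstration pairs (numbers stand for cell colours).
examples = [
    ([[1, 0], [0, 0]], [[0, 1], [0, 0]]),
    ([[2, 3, 0], [0, 0, 4]], [[0, 3, 2], [4, 0, 0]]),
]

rule_name = infer_rule(examples)
test_input = [[5, 0, 0], [0, 6, 0]]
prediction = CANDIDATE_RULES[rule_name](test_input)

print(rule_name)   # flip_horizontal
print(prediction)  # [[0, 0, 5], [0, 6, 0]]
```

The sketch only works because the correct rule happens to be on a short, pre-written list. The difficulty of the benchmark lies in puzzles whose rules have to be discovered rather than looked up.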

The Davos-based research institute Lab42 is also pursuing artificial general intelligence by supporting programmers around the world who are trying to solve the ARC tests with their own projects. In 2024, the institute reported that it had set a new world record, with one of its teams solving 34% of the ARC tests in an unofficial challenge.

While this performance is impressive, it is still far below human intelligence. ARC test creator François Chollet says a “smart” human being should be able to solve more than 95% of the puzzles without any training.

Giotto.ai and Lab42 believe that winning the competition would show they are close to creating a technology that works like the human brain – creative, capable of learning in real time, and quick to acquire new skills.

LLMs are not intelligent enough

Marco Zaffalon, scientific director of the Dalle Molle Institute for artificial intelligence (IDSIA) in Lugano, southern Switzerland, believes AGI is beyond the reach of current AI models, no matter how well they might do on certain tests.

“Most of today’s large language models have nothing really intelligent about them – they recognise patterns, but don’t understand causes,” he says. Without an understanding of the laws of cause and effect, Zaffalon says, AI remains confined to the basic intelligence of correlations.

A truly intelligent system would be capable of imagining alternative scenarios and asking, “what would have happened if …”. LLMs may appear to do this, Zaffalon adds, but only because they are good at imitating patterns found in texts written by humans.

The problem with reaching AGI, according to Zaffalon, is that most of Big Tech today prefers to improve its models with big data and engineering shortcuts, instead of seeking a true scientific revolution in making AI more like a human brain. “Real intelligence would require a totally new approach to AI, one that complements existing models,” Zaffalon says.

The rise of reasoning models

Some researchers believe that a new generation of AI models – known as reasoning systems – could help to overcome some of these limitations.

These systems also use statistical prediction, but they attempt to simulate human thought by breaking complex problems into smaller pieces and solving them sequentially.

Hoefler of ETH Zurich says they can tackle more complex problems, especially when paired with LLMs. His group is actively working to improve their performance, with results the scientist describes as “almost human-like”.

Companies around the world, including Giotto.ai, are hoping that reasoning models will steer them toward AGI. Giotto.ai says its system is far smaller and more efficient than LLMs like Grok 4 or GPT-5. But how exactly it achieves its results isn’t yet clear. The company has not publicly disclosed the technical details of its approach and plans to publish a report at the end of the competition.

AI: artificial intelligence

The set of techniques that enable machines to imitate certain human capabilities, such as learning, language or visual perception.

LLM: Large Language Model

This is an artificial intelligence model trained on huge amounts of text. It uses statistics to predict the next word in a sentence, producing consistent answers based on correlations rather than comprehension.

AGI: Artificial General Intelligence

A form of artificial intelligence capable of understanding, learning and adapting like a human being, performing any cognitive task without specific training.

Is Switzerland one step closer to general artificial intelligence?

If Giotto.ai’s AI were to win the ARC Prize over the American giants, it would be a remarkable achievement for Switzerland. But it remains unclear how a model capable of solving puzzles can be applied in practice. “A human being can do much more than solve puzzles,” Hoefler says.

In an interview with Swissinfo, Giotto.ai CEO Aldo Podestà says that if the company meets its goal of getting close to general intelligence within the next year, any application will be possible.

IDSIA’s Zaffalon remains sceptical of such claims. He doubts that systems based mainly on large language models and statistical prediction could ever reach human-level intelligence. And he points out that similar big promises from companies like OpenAI and Anthropic have so far proved to be little more than hype to attract large amounts of capital. None of these companies, says Zaffalon, has been able to explain how to overcome fundamental challenges such as causal reasoning.

“With these models, human intelligence will remain far on the horizon unless they are complemented by AIs that understand the world and reason about it through causality, which is still not the case today,” he says.

Chinese scientist Song-Chun Zhu, one of the world’s leading AI experts and director of the Beijing Institute for General Artificial Intelligence, also emphasises in an email the need to develop radically different AI technologies that truly understand the laws of cause and effect rather than relying on prediction.

Human-level AI seductive but risky

Human-level AI still seems to be out of reach. And for many researchers, that is not a bad thing. AI models that blur the boundaries between humans and machines are problematic on an ethical level, says Peter G. Kirchschläger, a professor of ethics at the University of Lucerne and a visiting professor at ETH Zurich.

“The risk is not that machines imitate us,” he says, “but that they end up replacing us in making decisions without anyone being accountable”.

For Kirchschläger, AI holds great potential, for instance to advance scientific research or solve everyday problems more sustainably. But he insists it is crucial to keep the distinction between humans and machines alive, so that every choice remains anchored in the responsibility of the individual.

“Decisions must remain in the hands of a human being: machines should limit themselves to executing them,” he says.

Edited by Gabe Bullard/gw
